How To Draw A Decision Tree

Machine Learning - Decision Tree

Decision Tree

In this chapter we will show you how to make a "Decision Tree". A Decision Tree is a Flow Chart, and can help you make decisions based on previous experience.

In the example, a person will try to decide if he/she should go to a comedy show or not.

Luckily our example person has registered every time there was a comedy show in town, along with some information about the comedian, and also whether he/she went or not.

| Age | Experience | Rank | Nationality | Go |
|-----|------------|------|-------------|----|
| 36 | 10 | 9 | UK | NO |
| 42 | 12 | 4 | USA | NO |
| 23 | 4 | 6 | N | NO |
| 52 | 4 | 4 | USA | NO |
| 43 | 21 | 8 | USA | YES |
| 44 | 14 | 5 | UK | NO |
| 66 | 3 | 7 | N | YES |
| 35 | 14 | 9 | UK | YES |
| 52 | 13 | 7 | N | YES |
| 35 | 5 | 9 | N | YES |
| 24 | 3 | 5 | USA | NO |
| 18 | 3 | 7 | UK | YES |
| 45 | 9 | 9 | UK | YES |
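If you do not have the shows.csv file, the table above can be written to it with a few lines of standard-library Python. This is a minimal sketch that assumes the file name shows.csv (the one read with pandas below) and the column names exactly as they appear in the table.

```python
import csv

# The 13 rows of the table: Age, Experience, Rank, Nationality, Go
ROWS = [
    (36, 10, 9, "UK", "NO"),
    (42, 12, 4, "USA", "NO"),
    (23, 4, 6, "N", "NO"),
    (52, 4, 4, "USA", "NO"),
    (43, 21, 8, "USA", "YES"),
    (44, 14, 5, "UK", "NO"),
    (66, 3, 7, "N", "YES"),
    (35, 14, 9, "UK", "YES"),
    (52, 13, 7, "N", "YES"),
    (35, 5, 9, "N", "YES"),
    (24, 3, 5, "USA", "NO"),
    (18, 3, 7, "UK", "YES"),
    (45, 9, 9, "UK", "YES"),
]

# Write a header line followed by one line per comedy show
with open("shows.csv", "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerow(["Age", "Experience", "Rank", "Nationality", "Go"])
    writer.writerows(ROWS)
```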

Now, based on this data set, Python can create a decision tree that can be used to decide if any new shows are worth attending.

How Does it Work?

First, import the modules you need, and read the dataset with pandas:

Example

Read and print the data set:

```python
import pandas
from sklearn import tree
import pydotplus
from sklearn.tree import DecisionTreeClassifier
import matplotlib.pyplot as plt
import matplotlib.image as pltimg

df = pandas.read_csv("shows.csv")

print(df)
```


To make a decision tree, all data has to be numerical.

We have to convert the non-numerical columns 'Nationality' and 'Go' into numerical values.

Pandas has a map() method that takes a dictionary with information on how to convert the values.

{'UK': 0, 'USA': 1, 'N': 2}

This means: convert the values 'UK' to 0, 'USA' to 1, and 'N' to 2.

Example

Change string values into numerical values:

```python
# Map each text value to a number; values missing from the dictionary become NaN
d = {'UK': 0, 'USA': 1, 'N': 2}
df['Nationality'] = df['Nationality'].map(d)

d = {'YES': 1, 'NO': 0}
df['Go'] = df['Go'].map(d)

print(df)
```

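Because map() looks each value up in the dictionary, a value spelled differently from the keys (for example 'Britain' instead of 'UK') is not converted: pandas puts NaN there instead, so it is worth checking for missing values after mapping. The plain-Python sketch below only imitates that lookup; it is not pandas code, None stands in for NaN, and the list of values is made up for illustration.

```python
# Plain-Python imitation of a dictionary-based map (not pandas itself):
# each value is looked up in the dictionary, and a value that is not a key
# becomes None here (pandas would use NaN).
d = {'UK': 0, 'USA': 1, 'N': 2}
nationality = ['UK', 'USA', 'N', 'Britain']  # 'Britain' is a hypothetical unmapped spelling

encoded = [d.get(value) for value in nationality]
unmapped = [value for value, code in zip(nationality, encoded) if code is None]

print(encoded)   # [0, 1, 2, None]
print(unmapped)  # ['Britain']
```

In pandas itself, df['Nationality'].isna().sum() after the map() call counts how many values were not converted.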

Then we have to separate the feature columns from the target column.

The feature columns are the columns that we try to predict from, and the target column is the column with the values we try to predict.

Example

X is the feature columns, y is the target column:

```python
features = ['Age', 'Experience', 'Rank', 'Nationality']

X = df[features]
y = df['Go']

print(X)
print(y)
```


Now we can create the actual decision tree, fit it with our details, and save a .png file on the computer:

Example

Create a Decision Tree, save it as an image, and show the image:

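```python
dtree = DecisionTreeClassifier()
dtree = dtree.fit(X, y)

# Export the fitted tree in Graphviz DOT format and render it to PNG
# (pydotplus needs the Graphviz "dot" program to be installed)
data = tree.export_graphviz(dtree, out_file=None, feature_names=features)
graph = pydotplus.graph_from_dot_data(data)
graph.write_png('mydecisiontree.png')

# Load the PNG back and display it with matplotlib
img = pltimg.imread('mydecisiontree.png')
imgplot = plt.imshow(img)
plt.show()
```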


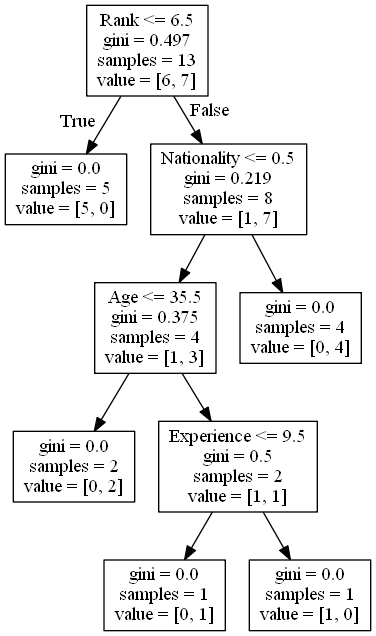

Result Explained

The decision tree uses your earlier decisions to calculate the odds of you wanting to go see a comedian or not.

Let us read the different aspects of the decision tree:

Rank

Rank <= 6.5 means that every comedian with a rank of 6.5 or lower will follow the True arrow (to the left), and the rest will follow the False arrow (to the right).

gini = 0.497 measures how mixed the results of the samples at this point are. With two possible results it is always a number between 0.0 and 0.5, where 0.0 would mean all of the samples got the same result, and 0.5 would mean that the samples are split exactly in the middle.

samples = 13 means that there are 13 comedians left at this point in the decision, which is all of them since this is the first step.

value = [6, 7] means that of these 13 comedians, 6 will get a "NO", and 7 will get a "GO".

Gini

There are many ways to split the samples; we use the Gini method in this tutorial.

The Gini method uses this formula:

$$\text{Gini} = 1 - \left(\frac{x}{n}\right)^2 - \left(\frac{y}{n}\right)^2$$

Where x is the number of positive answers ("GO"), n is the number of samples, and y is the number of negative answers ("NO"), which gives us this calculation:

$$1 - \left(\frac{7}{13}\right)^2 - \left(\frac{6}{13}\right)^2 = 0.497$$
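The same formula can be checked for any box in the tree. A small sketch, using Python's fractions module so the results are exact; the counts are taken from the value lines of the tree and listed as [NO, GO] (the order does not matter for the formula).

```python
from fractions import Fraction


def gini(counts):
    """Gini impurity of a node, given how many samples it holds of each result."""
    n = sum(counts)
    return 1 - sum(Fraction(c, n) ** 2 for c in counts)


# value = [NO, GO] for some of the boxes in the tree
for value in ([6, 7], [1, 7], [1, 3], [1, 1], [5, 0]):
    g = gini(value)
    print(value, g, float(g))
```

For [6, 7] the exact result is 84/169, which is about 0.497, the number shown in the first box.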

The next step contains two boxes, one box for the comedians with a 'Rank' of 6.5 or lower, and one box with the rest.

True - 5 Comedians Stop Here:

gini = 0.0 means all of the samples got the same result.

samples = 5 means that there are 5 comedians left in this branch (5 comedians with a Rank of 6.5 or lower).

value = [5, 0] means that 5 will get a "NO" and 0 will get a "GO".
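Why 6.5? One common way to choose a split, and the one sketched here, is to try a threshold halfway between every pair of neighbouring Rank values and keep the one with the lowest weighted Gini (each side's Gini weighted by its share of the samples). scikit-learn also places its thresholds halfway between neighbouring values, which is why the split reads 6.5 rather than 6. This sketch only looks at the Rank column; the (Rank, Go) pairs are copied from the table, with GO = 1 and NO = 0.

```python
from fractions import Fraction

# (Rank, Go) for the 13 comedians in the table, with GO = 1 and NO = 0
RANK_GO = [(9, 0), (4, 0), (6, 0), (4, 0), (8, 1), (5, 0), (7, 1),
           (9, 1), (7, 1), (9, 1), (5, 0), (7, 1), (9, 1)]


def gini(labels):
    """Gini impurity of a list of 0/1 results (exact, using fractions)."""
    n = len(labels)
    yes = sum(labels)
    return 1 - Fraction(yes, n) ** 2 - Fraction(n - yes, n) ** 2


def weighted_gini(pairs, threshold):
    """Gini of both sides of 'Rank <= threshold', weighted by their sizes."""
    left = [go for rank, go in pairs if rank <= threshold]
    right = [go for rank, go in pairs if rank > threshold]
    n = len(pairs)
    return Fraction(len(left), n) * gini(left) + Fraction(len(right), n) * gini(right)


values = sorted({rank for rank, _ in RANK_GO})                 # [4, 5, 6, 7, 8, 9]
thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]  # halfway points
scores = {t: weighted_gini(RANK_GO, t) for t in thresholds}

for t in thresholds:
    print(f"Rank <= {t}: weighted gini = {float(scores[t]):.3f}")

best = min(scores, key=scores.get)
print("best threshold:", best)  # best threshold: 6.5
```

Rank <= 6.5 scores lowest because one side (the 5 comedians with a rank of 6 or lower) is completely pure. This sketch does not compare against the other three columns, which the tree builder also tries.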

False - 8 Comedians Continue:

Nationality

Nationality <= 0.5 means that the comedians with a nationality value of less than 0.5 will follow the arrow to the left (which means everyone from the UK), and the rest will follow the arrow to the right.

gini = 0.219 means that the results in this branch are only slightly mixed (1 - (7/8)^2 - (1/8)^2 ≈ 0.219).

samples = 8 means that there are 8 comedians left in this branch (8 comedians with a Rank higher than 6.5).

value = [1, 7] means that of these 8 comedians, 1 will get a "NO" and 7 will get a "GO".

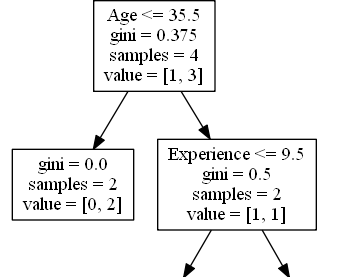

True - 4 Comedians Continue:

Age

Age <= 35.5 means that comedians at the age of 35.5 or younger will follow the arrow to the left, and the rest will follow the arrow to the right.

gini = 0.375 means that the results in this branch are more mixed than in the previous box (1 - (3/4)^2 - (1/4)^2 = 0.375).

samples = 4 means that there are 4 comedians left in this branch (4 comedians from the UK).

value = [1, 3] means that of these 4 comedians, 1 will get a "NO" and 3 will get a "GO".

False - 4 Comedians Stop Here:

gini = 0.0 means all of the samples got the same result.

samples = 4 means that there are 4 comedians left in this branch (4 comedians not from the UK).

value = [0, 4] means that of these 4 comedians, 0 will get a "NO" and 4 will get a "GO".

True - 2 Comedians Stop Here:

gini = 0.0 means all of the samples got the same result.

samples = 2 means that there are 2 comedians left in this branch (2 comedians at the age of 35.5 or younger).

value = [0, 2] means that of these 2 comedians, 0 will get a "NO" and 2 will get a "GO".

False - 2 Comedians Continue:

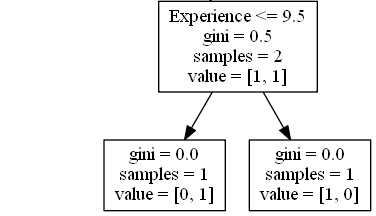

Experience

Experience <= 9.5 means that comedians with 9.5 years of experience, or less, will follow the arrow to the left, and the rest will follow the arrow to the right.

gini = 0.5 means that the results are split exactly in the middle (1 - (1/2)^2 - (1/2)^2 = 0.5).

samples = 2 means that there are 2 comedians left in this branch (2 comedians older than 35.5).

value = [1, 1] means that of these 2 comedians, 1 will get a "NO" and 1 will get a "GO".

True - 1 Comedian Ends Here:

gini = 0.0 means all of the samples got the same outcome.

samples = 1 means that there is 1 comedian left in this branch (1 comedian with 9.5 years of experience or less).

value = [0, 1] means that 0 will get a "NO" and 1 will get a "GO".

False - 1 Comedian Ends Here:

gini = 0.0 means all of the samples got the same result.

samples = 1 means that there is 1 comedian left in this branch (1 comedian with more than 9.5 years of experience).

value = [1, 0] means that 1 will get a "NO" and 0 will get a "GO".
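Put together, the boxes above describe a small set of if/else rules. The sketch below is a hypothetical hand-written copy of the pictured tree (the function name pictured_tree is made up for this illustration, and Nationality uses the codes UK = 0, USA = 1, N = 2). Running it on the 13 rows of the table gives back every recorded answer.

```python
# Hypothetical hand-written copy of the pictured tree (not generated by scikit-learn).
# Nationality codes: UK = 0, USA = 1, N = 2. Returns 1 for "GO" and 0 for "NO".
def pictured_tree(age, experience, rank, nationality):
    if rank <= 6.5:
        return 0                      # 5 comedians, all "NO"
    if nationality <= 0.5:            # comedians from the UK
        if age <= 35.5:
            return 1                  # 2 comedians, both "GO"
        if experience <= 9.5:
            return 1                  # 1 comedian, "GO"
        return 0                      # 1 comedian, "NO"
    return 1                          # 4 comedians not from the UK, all "GO"


# The 13 rows of the table: (Age, Experience, Rank, Nationality code, Go)
DATA = [
    (36, 10, 9, 0, 0), (42, 12, 4, 1, 0), (23, 4, 6, 2, 0), (52, 4, 4, 1, 0),
    (43, 21, 8, 1, 1), (44, 14, 5, 0, 0), (66, 3, 7, 2, 1), (35, 14, 9, 0, 1),
    (52, 13, 7, 2, 1), (35, 5, 9, 2, 1), (24, 3, 5, 1, 0), (18, 3, 7, 0, 1),
    (45, 9, 9, 0, 1),
]

predictions = [pictured_tree(*row[:4]) for row in DATA]
labels = [row[4] for row in DATA]
print(predictions == labels)  # True
```

Getting every recorded show right is expected for a tree grown until every box is pure, as this one is; it says little about how well the tree will judge new shows.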

Predict Values

We can use the Decision Tree to predict new values.

Example: Should I go see a show starring a 40-year-old American comedian, with 10 years of experience, and a comedy ranking of 7?

Example

Use the predict() method to predict new values (in the same order as the feature columns: Age, Experience, Rank, Nationality, with USA encoded as 1):

```python
print(dtree.predict([[40, 10, 7, 1]]))
```


Example

What would the reply be if the comedy rank was 6?

```python
print(dtree.predict([[40, 10, 6, 1]]))
```

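To see which boxes a prediction passes through, the pictured tree can be stored as data and walked from the top. In this sketch the names TREE and predict_with_path are hypothetical. Each leaf keeps its value = [NO, GO] counts, and the share of each count is reported the way scikit-learn's predict_proba() describes its class probabilities: as the fraction of training samples of each class in the leaf.

```python
FEATURES = ["Age", "Experience", "Rank", "Nationality"]

# Hypothetical copy of the pictured tree.
# Internal node: (feature index, threshold, True branch, False branch)
# Leaf: [NO count, GO count], copied from the "value" line of each box
TREE = (2, 6.5,
        [5, 0],
        (3, 0.5,
         (0, 35.5,
          [0, 2],
          (1, 9.5, [0, 1], [1, 0])),
         [0, 4]))


def predict_with_path(node, sample):
    """Walk from the top box to a leaf; return (prediction, [P(NO), P(GO)], path)."""
    path = []
    while isinstance(node, tuple):
        index, threshold, if_true, if_false = node
        goes_left = sample[index] <= threshold
        path.append(f"{FEATURES[index]} <= {threshold}: {goes_left}")
        node = if_true if goes_left else if_false
    no, go = node
    total = no + go
    return (1 if go > no else 0), [no / total, go / total], path


for sample in ([40, 10, 7, 1], [40, 10, 6, 1]):
    prediction, proba, path = predict_with_path(TREE, sample)
    print(sample, "->", prediction, proba, path)
```

Both example comedians end in boxes where every earlier show had the same result, so the pictured tree reports full certainty for them; that certainty comes only from the 13 recorded shows.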

Different Results

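You will see that the Decision Tree can give you different results if you run it enough times, even if you feed it with the same data.

That is because scikit-learn's DecisionTreeClassifier looks at the features in a random order at each split, and when several splits are equally good, which one is chosen depends on that order. With a small data set like this one such ties are common, so the shape of the tree, and sometimes the answer, can vary. Passing a fixed integer, for example DecisionTreeClassifier(random_state=0), makes the result repeatable.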
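Different results are easy to get with this data set. The sketch below scores every "feature <= midpoint" split by weighted Gini for the 8 comedians with a Rank higher than 6.5 (rows copied from the table). It finds four splits that are exactly as good as each other, Nationality <= 0.5 among them, so a tree built from the same data could just as well use Age, Experience or Rank at that step. The seeded random pick at the end only imitates the idea of fixing a seed; it is not how scikit-learn itself breaks ties.

```python
import random
from fractions import Fraction

FEATURES = ["Age", "Experience", "Rank", "Nationality"]

# The 8 comedians with a Rank higher than 6.5, copied from the table:
# (Age, Experience, Rank, Nationality code, Go) with UK=0, USA=1, N=2 and GO=1, NO=0
NODE_ROWS = [
    (36, 10, 9, 0, 0),
    (43, 21, 8, 1, 1),
    (66, 3, 7, 2, 1),
    (35, 14, 9, 0, 1),
    (52, 13, 7, 2, 1),
    (35, 5, 9, 2, 1),
    (18, 3, 7, 0, 1),
    (45, 9, 9, 0, 1),
]


def gini(labels):
    """Gini impurity of a list of 0/1 results (exact, using fractions)."""
    n = len(labels)
    yes = sum(labels)
    return 1 - Fraction(yes, n) ** 2 - Fraction(n - yes, n) ** 2


def candidate_splits(rows):
    """Score every 'feature <= midpoint' split by its weighted Gini."""
    n = len(rows)
    for index, name in enumerate(FEATURES):
        values = sorted({row[index] for row in rows})
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            left = [row[4] for row in rows if row[index] <= threshold]
            right = [row[4] for row in rows if row[index] > threshold]
            score = (Fraction(len(left), n) * gini(left)
                     + Fraction(len(right), n) * gini(right))
            yield score, name, threshold


splits = list(candidate_splits(NODE_ROWS))
best_score = min(score for score, _, _ in splits)
tied = [(name, threshold) for score, name, threshold in splits if score == best_score]
print(best_score, tied)
# 3/16 [('Age', 39.5), ('Experience', 9.5), ('Rank', 8.5), ('Nationality', 0.5)]

# Picking one of the tied splits at random: a fixed seed makes the pick repeatable
rng = random.Random(0)
print(rng.choice(tied))
```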

Source: https://www.w3schools.com/python/python_ml_decision_tree.asp

Posted by: taylorthue1949.blogspot.com
